My tech department held a small, internal, one-day idea conference this week. I wanted to keep it from becoming a programmer fest, so I pitched Crowdsource Testing as a topic. Although I knew nothing about it, I had just seen James Whittaker’s STARwest keynote, and the notion of having non-testers test my product had been bouncing around my brain.

With a buzzword like “crowdsource,” I guess I shouldn’t be surprised they picked it. That, and maybe mine was one of their only submissions.

Due to several personal conflicts, I had little time to research, but I did spend about 12 hours alone with my brain on a long car ride. During that time I came up with a few ideas, or at least built on other people’s.

- Would you hang this on your wall?

…only a handful of people raised their hands. But this is a Jackson Pollock! Worth millions! It’s quality art! Maybe software has something in common with art.

- Two testing challenges that skilled in-house testers probably cannot solve are:

- Quality is subjective. (I tip my hat to Michael Bolton and Jerry Weinberg.)

- There is an infinite number of tests to execute. (I tip my hat to James Bach and Robert Sabourin.)

- There is a difference between acting like a user and using software. (I tip my hat to James Whittaker.) The only way to truly measure software quality is to use the software as a user. How else can you hit just the right tests?

- Walking through the various test levels: Skilled testers act. Team testers act (this is when non-testers on your product team help you test). User acceptance testing is acting (they are still pretending to do some work). Dogfooders…ah yes! Dogfooders are finally not acting. This is the first level to cross the boundary between acting and using. Why not stop here? Because it’s still weak against the infinite-tests problem.

- The next level is crowdsource testing. Or is it crowdsourced testing? I see both used frequently. I can think of three ways to implement crowdsource testing:

- Use a crowdsource testing company.

- Do a limited beta release.

- Do a public beta release.

- Is crowdsource testing acting or using? If you outsource your crowdsource testing to a company (e.g., uTest, Mob4hire, TopCoder), now you’re hiring actors again. However, if you do a limited or public beta release, you’re asking people to use your product. See the difference?

- Beta…is a magic word. It’s like stamping “draft” on the report you’re about to give your boss. It also says: this product is alive! New! Exciting! Maybe even cutting edge. It’s still fixable. We won’t hang you out to dry. It can only get better!

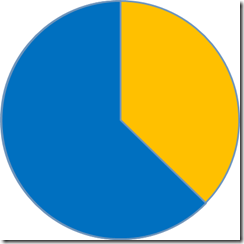

- Who should facilitate crowdsource testing? The skilled in-house tester. Maybe crowdsource testing is just another tool in their toolbox. If they spent this much time executing their own tests…

…maybe instead, they could spend this much time (blue) executing their own tests, and this much time (yellow) collecting test information from their crowd…

- How do we get feedback from the crowd? From least cool to coolest: the crowd calls our tech support team; we hold virtual feedback meetings; we go find the feedback on Twitter, etc.; the crowd provides feedback via feedback options tightly integrated into the product’s UI; we collect it programmatically, in a stealthy way.

- How do we enlist the crowd? Via creative spin and marketing (e.g., follow Hipster’s example).

- Which products do we crowdsource test? NOT the mission-critical ones. We crowdsource test the trivial products, like entertainment or social products.

- How far can we take this? Far. We can ask the crowd to build the product too.

- What can we do now?

- BAs: Determine beta candidate features.

- Testers: Spend less time testing trivial features. Outsource those to the crowd.

- Programmers: Decouple trivial features from critical features.

- UX Designers: Help users find beta features and provide feedback.

- Managers: Encourage more features to get released earlier.

- Executives: Hire a crowdsource test czar.
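For the programmers in the room, here is one way you might sketch “decouple trivial features from critical features,” assuming a simple opt-in beta gate. The names `Feature` and `BetaGate` are hypothetical, made up for illustration; a real product would more likely lean on an existing feature-flag system. Critical features stay on for everyone, beta features show up only for users who opted in, and each use of a beta feature is quietly counted, a crude stand-in for collecting feedback programmatically.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Feature:
    name: str
    beta: bool  # True for trivial features the crowd may exercise early


@dataclass
class BetaGate:
    """Hypothetical gate: critical features for everyone, beta features for opted-in users."""
    features: dict = field(default_factory=dict)
    opted_in: set = field(default_factory=set)
    usage: Counter = field(default_factory=Counter)

    def register(self, feature: Feature) -> None:
        self.features[feature.name] = feature

    def opt_in(self, user: str) -> None:
        # Assumption: users join the crowd by opting in to a beta program.
        self.opted_in.add(user)

    def is_enabled(self, user: str, name: str) -> bool:
        feature = self.features[name]
        # Critical features are always on; beta features only for the crowd.
        return not feature.beta or user in self.opted_in

    def use(self, user: str, name: str) -> bool:
        if not self.is_enabled(user, name):
            return False
        if self.features[name].beta:
            # Quietly record real usage of beta features (simple telemetry).
            self.usage[name] += 1
        return True


gate = BetaGate()
gate.register(Feature("checkout", beta=False))
gate.register(Feature("share_to_friends", beta=True))
gate.opt_in("alice")
gate.use("alice", "share_to_friends")  # allowed and counted
gate.use("bob", "share_to_friends")    # refused: bob did not opt in
gate.use("bob", "checkout")            # allowed: critical feature, not counted
print(gate.usage)  # Counter({'share_to_friends': 1})
```

Keeping the beta check in one place is the point of the decoupling: a trivial feature can go out to the crowd early without touching the code paths everyone else depends on.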

There it is in a nutshell. Of course, the live presentation includes a bunch of fun extra stuff like the 1978 Alpo commercial that may have inspired the term “dogfooding”, as well as other theories and silliness.


Thanks for shedding some light on crowdsourced testing, Eric! I have been talking about crowdsourcing as one of several powerful Testing in Production (TiP) techniques. For example, see slides 47-50 of my TiP talk here: http://bit.ly/seth_STP_slides.

You mention three ways to implement crowdsource testing; let me add a fourth. You can solicit real users and pay them. The example I discuss in my talk is a small start-up that used a combination of Amazon Mechanical Turk and SurveyMonkey to pay a small fee to have users perform real tasks on their nascent website.

You also talk about collecting telemetry on what users are actually doing. This is a powerful technique and can be leveraged in controlled online experimentation (A/B testing) as well as in user performance testing, where we can collect performance metrics for real users in real environments.

"Difference between acting like a user and using software" - excellent. In one of our projects, we took the product home, plugged it in, and asked our family members and friends to use it. The product owners were surprised but happy when they saw the report after this exercise. Some bugs required a change in the usability flows. Thumbs up to crowdsourcing!

Surprisingly, Whittaker himself was not pleased with crowd testing just a year ago. Do you think crowdtesting should be run strictly by experienced professional testers, or by anyone who has a computer and can report a simple bug? Isn't it a headache for crowdtesting clients to review crappy, primitive bugs? I know of only one place, bugpub.com, where testers are chosen based on real experience.