After seeing Mark Vasko’s CAST 2011 lightning talk, I was inspired to create a Test Idea Wall with one of my project teams. Much to my surprise, the damn thing actually works.

When I’m taking a break from testing something, I pause as I walk past the Test Idea Wall. My brain jumps around between the pictures and discovers gaps in my test coverage.

Our wall is incredibly simple, but so far it contains the main test idea triggers we forget. For example, the picture of the padlock reminds us to consider locking scenarios, something that is often just an afterthought, but always gets us fruitful information:

- What if we run the same tests as a read-only user?

- What if we run the same tests while another user has our lock?

- What if we run the same tests while the system has our lock?

- What if certain users should not have this permission?
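One way to keep these questions from staying an afterthought is to pair every existing test with every locking or permission context. Here is a minimal sketch in Python; the two base test names are hypothetical placeholders, not tests from our project.

```python
from itertools import product

# Hypothetical base tests; substitute whatever you already run.
base_tests = ["create order", "edit order"]

# Contexts taken from the padlock questions above.
lock_contexts = [
    "as a read-only user",
    "while another user has our lock",
    "while the system has our lock",
    "as a user who should not have this permission",
]

# Every base test paired with every context is one test idea.
test_ideas = [
    f"{test} {context}" for test, context in product(base_tests, lock_contexts)
]

for idea in test_ideas:
    print(idea)
```

The list grows quickly, so it probably works best as a prompt for picking a few interesting combinations rather than a checklist to execute in full.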

Thanks, Mark!

Crowdsource Testing – Acting vs. Using

3 comments Posted by Eric Jacobson at Thursday, December 15, 2011

My tech department had a little internal one-day idea conference this week. I wanted to keep it from being a programmer fest so I pitched Crowdsource Testing as a topic. Although I knew nothing about it, I had just seen James Whittaker’s STARwest Keynote, and the notion of having non-testers test my product had been bouncing around my brain.

With a buzzword like “crowdsource” I guess I shouldn’t be surprised they picked it. That and maybe I was one of their only submissions.

Due to several personal conflicts I had little time to research, but I did spend about 12 hours alone with my brain in a long car ride. During that time I came up with a few ideas, or at least built on other people’s.

- Would you hang this on your wall?

…only a handful of people raised their hands. But this is a Jackson Pollock! Worth millions! It’s quality art! Maybe software has something in common with art.

- Two testing challenges that can probably not be solved by skilled in-house testers are:

- Quality is subjective. (I tip my hat to Michael Bolton and Jerry Weinberg)

- There are an infinite number of tests to execute. (I tip my hat to James Bach and Robert Sabourin)

- There is a difference between acting like a user and using software. (I tip my hat to James Whittaker). The only way to truly measure software quality is to use the software, as a user. How else can you hit just the right tests?

- Walking through the various test levels: Skilled testers act. Team testers act (this is when non-testers on your product team help you test). User acceptance testing is acting (they are still pretending to do some work). Dogfooders…ah yes! Dogfooders are finally not acting. This is the first level to cross the boundary between acting and using. Why not stop here? It’s still weak in the infinite tests area.

- The next level is crowdsource testing. Or is it crowdsourced testing? I see both used frequently. I can think of three ways to implement crowdsource testing:

- Use a crowdsource testing company.

- Do a limited beta release.

- Do a public beta release.

- Is crowdsource testing acting or using? If you outsource your crowdsource testing to a company (e.g., uTest, Mob4hire, TopCoder), now you’re hiring actors again. However, if you do a limited or public beta release, you’re asking people to use your product. See the difference?

- Beta…is a magic word. It’s like stamping “draft” on the report you’re about to give your boss. It also says, this product is alive! New! Exciting! Maybe cutting edge. It’s fixable still. We won’t hang you out to dry. It can only get better!

- Who should facilitate crowdsource testing? The skilled in-house tester. Maybe crowdsource testing is just another tool in their toolbox. If they spent this much time executing their own tests…

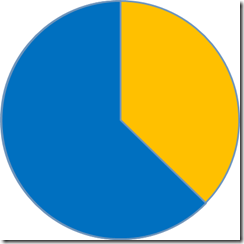

…maybe instead, they could spend this much time (blue) executing their own tests, and this much time (yellow) collecting test information from their crowd…

- How do we get feedback from the crowd? (from least cool to coolest) The crowd calls our tech support team, virtual feedback meetings, we go find the feedback on twitter etc., the crowd provides feedback via tightly integrated feedback options on the product’s UI, we programmatically collect it in a stealthy way.

- How do we enlist the crowd? Via creative spin and marketing (e.g., follow Hipster’s example).

- Which products do we crowdsource test? NOT the mission critical ones. We crowdsource the trivial products like entertainment or social products.

- How far can we take this? Far. We can ask the crowd to build the product too.

- What can we do now?

- BA’s: determine beta candidate features.

- Testers: spend less time testing trivial features. Outsource those to the crowd.

- Programmers: Decouple trivial features from critical features.

- UX Designers: Help users find beta features and provide feedback.

- Managers: Encourage more features to get released earlier.

- Executives: Hire a crowdsource test czar.

There it is in a nutshell. Of course, the live presentation includes a bunch of fun extra stuff like the 1978 Alpo commercial that may have inspired the term “dogfooding”, as well as other theories and silliness.

STARwest 2011 Lightning Talk Keynotes

2 comments Posted by Eric Jacobson at Monday, November 28, 2011

If there were a testing conference that consisted of only lightning talks, I would be the first to sign up. Maybe I have a short attention span or something. STARwest’s spin on lightning talks is “Lightning Talk Keynotes” in which (I assume) Lee Copeland handpicks lightning talk presenters. He did not disappoint. Here is my summary:

Michael Bolton

Michael started with RST’s formal definition of a Bug: “anything that threatens the value of the product”. Then he shared his definition of an Issue: “anything that threatens the value of the testing” (e.g., a tool I need, a skill I need). Finally, Bolton suggested, maybe issues are more important than bugs, because issues give bugs a place to hide.

Hans Buwalda

His main point was, “It’s the test, stupid”. Hans suggested, when test automation takes place on teams, it’s important to separate the testers from the test automation engineers. Don’t let the engineers dominate the process because no matter how fancy the programming is, what it tests is still more important.

Lee Copeland

Lee asked his wife why she cuts the ends off her roasts, and lays them against the long side of the roast, before cooking them. She wasn’t sure because she learned it from her mother. So they asked her mother why she cuts the ends off her roasts. Her mother had the same answer so they asked her grandmother. Her grandmother said, “Oh, that’s because my oven is too narrow to fit the whole roast in it”.

Lee suggested most processes begin with “if…then” statements (e.g., if the software is difficult to update, then we need specs). But over time, the “if” part fades away. Finally, Lee half-seriously suggested all processes should have a sunset clause.

Dale Emory

If an expert witness makes a single error, out of an otherwise perfect testimony, it raises doubts in the jurors' minds. If 1 out of 800 automated tests throws a false positive, people accept that. If it keeps happening, people lose faith in the tests and stop using them. Dale suggests the following prioritized way of preventing the above:

- Remove the test.

- Try to fix the test.

- If you are sure it works properly, add it back to the suite.

In summary, Dale suggests, reliability of the test suite is more important than coverage.
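Dale's three steps can be sketched as a small quarantine mechanism. This is only an illustration in Python, not anything Dale showed; the `SuiteRegistry` class and the test names are made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class SuiteRegistry:
    """Hypothetical record of which automated tests are currently trusted."""

    active: set = field(default_factory=set)
    quarantined: set = field(default_factory=set)

    def quarantine(self, name: str) -> None:
        # Step 1: remove the unreliable test from the suite right away.
        self.active.discard(name)
        self.quarantined.add(name)

    def restore(self, name: str, verified_reliable: bool) -> bool:
        # Step 3: add the test back only once we are sure it works properly.
        # (Step 2, fixing the test, happens outside this registry.)
        if name in self.quarantined and verified_reliable:
            self.quarantined.remove(name)
            self.active.add(name)
            return True
        return False


suite = SuiteRegistry(active={"test_login", "test_invoice_total"})
suite.quarantine("test_invoice_total")  # it threw a false positive
restored_early = suite.restore("test_invoice_total", verified_reliable=False)
restored_later = suite.restore("test_invoice_total", verified_reliable=True)
```

The point of the design is that the suite people actually run contains only trusted tests, even if that temporarily costs some coverage.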

Julie Gardiner

Julie showed a picture of one of those sliding piece puzzles; the kind with one empty slot so adjacent pieces can slide into it. She pointed out that this puzzle could not be solved if it weren’t for the empty slot.

Julie suggested slack is essential for improvement, innovation, and morale and that teams may want to stop striving for 100% efficiency.

Julie calls this “the myth of 100% efficiency”.

Note: as a fun gimmicky add-on, she offered to give said puzzle to anyone that went up to her after her lightning talk to discuss it with her. I got one!

Bob Galen

Sorry, I didn’t take any notes other than “You know you’ve arrived when people are pulling you”. Either it was so compelling I didn’t have time to take notes, or I missed the take-away.

Dorothy Graham

Per Dorothy, coverage is a measure of some aspect of thoroughness. 100% coverage does not mean running 100% of all the tests we’ve thought of. Coverage is not a relationship between the tests and themselves. Instead, it is a relationship between the tests and the product under test.

Dorothy suggests, whenever you hear “coverage”, ask “Of what?”.
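To make the “Of what?” question concrete, here is a minimal Python sketch that treats coverage as a ratio between what the tests exercised and some chosen model of the product. The requirement IDs are hypothetical, and requirements are only one of many possible things to measure coverage against.

```python
def coverage(exercised: set, model: set) -> float:
    """Fraction of the chosen model of the product that the tests exercised."""
    if not model:
        raise ValueError("coverage of what? the model is empty")
    return len(exercised & model) / len(model)


# Hypothetical example: four requirements, and a test run that touched two.
requirements = {"REQ-1", "REQ-2", "REQ-3", "REQ-4"}
touched_by_tests = {"REQ-1", "REQ-2"}

requirement_coverage = coverage(touched_by_tests, requirements)
print(f"Requirement coverage: {requirement_coverage:.0%}")
```

Running every test we have thought of would not change this number unless those tests touch the remaining requirements.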

Jeff Payne

Jeff began by suggesting, “If you’re a tester and don’t know how to code in 5 years, you’ll be out of a job”. He said 80% of all tester job posts require coding and this is because we need more automated tests.

Martin Pol

My notes are brief here but I believe Martin was suggesting, in the near future, testers will need to focus more on non-functional tests. The example Martin gave is the cloud; if the cloud goes down, your services (dependent on the cloud) will be unavailable. This is an example of an extra dependency that comes with using future technology (i.e., the cloud).

STARwest’s “Test Estimation & the Art of Negotiation”

2 comments Posted by Eric Jacobson at Monday, November 21, 2011

I’m going back through my STARwest notes and I want to blog about a few other sessions I enjoyed.

One of those was Nancy Kelln and Lynn McKee’s, “Test Estimation and the Art of Negotiation”, in which they suggested a new way to answer the popular question…

How long will testing take?

I figured Nancy and Lynn would have some fresh and interesting things to say about test estimation since they hosted the Calgary Perspectives on Software Testing workshop on test estimation this year. I was right.

In this session, they tried to help us get beyond using what one guy in the audience referred to as a SWAG (Silly Wild-Ass Guess).

- Nancy and Lynn pointed to the challenge of dependencies. Testers sometimes attempt to deal with dependencies by padding their estimates. This won’t work for Black Swans, which are the unknown unknowns; those events cannot be planned for or referenced.

- The second challenge is optimism. We think we can do more than we can. Nancy and Lynn demonstrated an example of the impact of bugs on testing time. As more bugs are discovered, and their fixes need to be verified, more time is taken from new testing, time that is often underestimated.

- The third challenge is identifying what testing means to each person. Does it include planning? Reporting? Lynn suggested trying to estimate how much fun one would have at Disneyland. Does the trip start when I leave my house, get to California, enter the park, or get on a ride? When does it end?

Eventually, Lynn and Nancy suggested the best estimate is no estimate at all.

Instead, it is a negotiation with the business (or your manager). When someone asks, “how long will testing take?”, maybe you should explain that testing is not a phase. Testing is exploration, discovery, learning, and reporting. Testing could end when there are no more interesting questions to answer but stopping testing is a business decision.

They further suggested that testers have a responsibility to help the business understand the trade-offs; if quality expectations are high, it may require more testing than if they are lower. Change your test approach to fit the needs. If you only have one day to test, you can still do your best to find the most mission critical information that you can in one day.

Apart from the session content, Lynn and Nancy were awesome presenters. They used volunteers from the audience for role plays, cartoons, brainstorms, and other interactive techniques to keep the session engaging. I heard they proposed a full day session for STPCon Spring. That would be a fun day.

Thanks Nancy and Lynn!

MORE BATS – Or Build Your Own Test Report Heuristic

2 comments Posted by Eric Jacobson at Monday, November 07, 2011

When someone walks up to your desk and asks, “How’s the testing going?”, a good answer depends on remembering to tell that person the right things.

After reading Michael Kelly’s post on coming up with a heuristic (model), I felt inspired to tweak his MCOASTER test reporting heuristic model. I tried using it but it felt awkward. I wanted one that was easier for me to remember, with slightly different trigger words, ordered better for my context. It was fun. Here is what I did:

- I listed all the words that described things I may want to cover in a test report. Some words were the same as Michael Kelly’s but some were different (e.g., “bugs”).

- Then I took the first letter of each of my words and plugged them into Wordsmith.org’s anagram solver.

- Finally, I skimmed through the anagram solver results, until I got to those starting with ‘M’ (I knew I wanted ‘Mission’ to be the first trigger). If my mnemonic didn’t jump out at me, I tweaked the input words slightly using synonyms and repeated step 2.

Two minutes later I settled on MORE BATS. After being an avid caver for some 13 years, it was perfect. When someone asks for a test report, I feel slightly lost at first, until I see MORE BATS. Just like in caving; when deep into the cave I feel lost, but seeing “more bats” is a good sign the entrance is in that general direction, because more bats tend to be near the entrance.
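The anagram step can also be checked with a few lines of Python. This is a rough sketch of the idea, not what the anagram solver does internally; the helper names are my own, and the trigger words are the ones from my list.

```python
from collections import Counter


def initials(trigger_words):
    """First letter of each trigger word, upper-cased."""
    return "".join(word[0].upper() for word in trigger_words)


def is_mnemonic(candidate, trigger_words):
    """True if the candidate phrase uses each initial exactly once."""
    letters = [ch for ch in candidate.upper() if ch.isalpha()]
    return Counter(letters) == Counter(initials(trigger_words))


def order_by(candidate, trigger_words):
    """Re-order the trigger words to follow the letters of the mnemonic."""
    pool = {}
    for word in trigger_words:
        pool.setdefault(word[0].upper(), []).append(word)
    return [pool[ch].pop(0) for ch in candidate.upper() if ch.isalpha()]


# Trigger words in the order I happened to think of them.
triggers = ["Bugs", "Status", "Mission", "Risks", "Audience",
            "Obstacles", "Techniques", "Environment"]

fits = is_mnemonic("MORE BATS", triggers)
ordered = order_by("MORE BATS", triggers)
print(ordered)
```

Swapping a trigger word for a synonym changes the initials, which is why tweaking the input words produces a different set of candidate mnemonics.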

Here’s how it works (as a test report heuristic):

Mission

Obstacles

Risks

Environment

Bugs

Audience

Techniques

Status

“My mission is to test the new calendar control. My only obstacle is finding enough data in the future for some of my scenarios. The risks I’m testing include long range future entries, multiple date ranges, unknown begin dates, and extremely old dates. I’m using the Dev environment because the control is not working in QA. I found two date range bugs. Since this is a programmer (audience) asking me for a test report I will describe my techniques; I’m checking my results in database tableA because the UI is not completed. I think I’ll be done today (status).”

Try to make your own and let me know what you come up with.

The first time I saw James Whittaker was in 2004 at an IIST conference. He dazzled us with live demos of bugs that were found in public software. This included a technique of editing HTML files to change quantity combo box values to negatives, resulting in a credit to one’s VISA card.

And now, fresh into his job as Google’s test engineering director, I was thrilled to see him headlining STARwest’s keynotes with his challenging “All That Testing Is Getting in the Way of Quality” presentation.

After a brief audience survey, James convinced most of us that testers do not get respect in their current roles. Then he kicked us while we were down, by suggesting the reason we lack respect is all the recent software quality “game changers” have been thought of by programmers:

- Today’s software is easier to update and fix problems in (easier than software from 10 years ago).

- Crash recovery – some software can fix itself.

- Reduction of dependencies via standards (e.g., most HTML 5.0 websites work on all browsers now).

- Continuous Builds – quicker availability of builds makes software easier to test but it has nothing to do with testers.

- Initial Code Quality (e.g., TDD, unit tests, peer reviews)

- Convergence of the User and Test Community (e.g., crowd source testing, dog food testing). Per James, “Testers have to act like users, users don’t have to act.”

Following the above were four additional “painful” facts about testing:

- Only the software matters. People care about what was built, not who tested it.

- The value of the testing is the activity of testing, not the artifact. Stop wasting your time creating bug reports and test cases. Start harnessing the testing that already exists (e.g., beta testing).

- The only important artifact is the code. Want your tests to matter? Make them part of the code.

- Bugs don’t count unless they get fixed. Don’t waste time logging bugs. Instead, keep the testers testing.

The common theme here is that programmers are getting better at testing, and testers are not getting better at programming. The reason this should scare testers is, per James:

“It’s only important that testing get done, not who gets it done.”

I agree. And yes, I’m a bit scared.

After a cocky demo of some built in bug reporting tools in a private version of Google Maps, James finally suggested his tip on tester survival; get a specialty and become an expert in some niche of testing (e.g., Security, Internationalization, Accessibility, Privacy) or learn how to code.

The hallway STARwest discussions usually brought up Whittaker’s keynote. However, apart from a few, nearly everyone I encountered did not agree with his message and some even laughed it off. One tester I had lunch with tests a system used by warehouse operators to organize warehouses. He joked that his warehouse users would not be drooling at the opportunity to crowdsource test the next version of WarehouseOrganizer 2.0. In fact, they don’t even want the new version. Another tester remarked that his software was designed to replace manual labor and would likely result in layoffs...dog food testing? Awkward.

Thank you STARwest, for bringing us such a challenging keynote. I thoroughly enjoyed it and think it was an important wake up call for all of us testers. And now I’m off to study my C#!

STARwest’s “Be The Tester Your Dog Thinks You Are”

3 comments Posted by Eric Jacobson at Wednesday, October 12, 2011

Last week I had the pleasure (or displeasure) of presenting my first 60-minute track session at a testing conference. STARwest 2011 was one of the best conferences I’ve attended. My next several posts will focus on what I learned. But first, since several have asked, I’ll share my presentation.

There are two reasons why I submitted my presentation proposal in the first place:

- REASON #1: Each conference tends to highlight presenters from Microsoft, Google, Mozilla, Facebook, and other companies whose main product is software. I, and I suspect most of the world’s software testers, do not work for companies whose main product is software. Instead, we work in IT shops of banks, entertainment companies, military branches, schools, etc., where we test custom internal operational software, on a shoestring budget.

- REASON #2: The conference sessions not presented by software companies are often presented by independent consultants who always try to send us home convinced we need to shake up our IT shops and force our IT shops to use the next big thing (e.g., Acceptance Test Driven Development).

I’ve attended STAR, STPCon, and IIST conferences. I always feel a bit frustrated as a conference attendee due to the above reasons. What I’m really looking for at conferences are skills or tactics I can decide to use myself, immediately, without having to overhaul my entire team, when I return from a conference. I suspect I am not alone.

Thus, I present to you, “Be The Tester Your Dog Thinks You Are”. Lee Copeland, who graciously accepted my proposal for STARwest, pigeonholed my presentation into a category he calls Personal Leadership. I agree with his taxonomy and believe the ideas in my presentation have helped me love my job as a tester, provide additional value to my development team, and advance my career. That sounds like personal leadership to me.

The slides below may give you the mile high view but they were designed to be coupled with verbal content and exercises. I apologize but there is no easy way to post this presentation online. I’ve also removed the video because apparently, Google docs won’t convert PPTs > 10MBs.

I’ll be happy to blog in detail on any areas of interest, not already covered in prior posts.

Special thanks to all the great testers who attended my session, participated, and provided feedback throughout. Though I have lots to improve upon, your enthusiasm helped make it bearable. I am also forever grateful to Lee Copeland for having faith in me and giving me the opportunity to present at his STARwest conference.

It’s Okay To Control Your Test Environment

0 comments Posted by Eric Jacobson at Friday, September 30, 2011

Sometimes production bug scenarios are difficult to recreate in a test environment.

One such bug was discovered on one of my projects:

If ItemA is added to a database table after SSIS Package1 executes but before SSIS Package2 executes, an error occurs. Said packages execute at random intervals frequently, to the point where a human cannot determine the exact time to add ItemA, if that human is trying to reproduce the bug. Are you with me?

So what is a tester to do?

The answer is, control your test environment. Disable the packages and manually execute them to run one time, when you want them to.

- Execute SSIS Package1 once.

- Add ItemA to the database table.

- Execute SSIS Package2 once.
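The three steps above can be sketched as a tiny simulation. This Python example does not call SSIS at all; `FakeEnvironment` and the behavior of its two package methods are stand-ins I invented to show why a fixed order makes the bug repeatable.

```python
class FakeEnvironment:
    """Hypothetical stand-in for a test environment with two packages."""

    def __init__(self):
        self.table = []      # the database table
        self.seen = []       # rows Package1 knew about on its last run

    def run_package1(self):
        # Assumed behavior: Package1 records the rows it has seen.
        self.seen = list(self.table)

    def run_package2(self):
        # Assumed behavior: Package2 fails on a row Package1 never saw.
        unseen = [row for row in self.table if row not in self.seen]
        if unseen:
            raise RuntimeError(f"unprocessed rows: {unseen}")


def bug_reproduces(env):
    """Run the packages once each, by hand, with ItemA added in between."""
    env.run_package1()
    env.table.append("ItemA")
    try:
        env.run_package2()
    except RuntimeError:
        return True
    return False


reproduced = bug_reproduces(FakeEnvironment())

# Control case: ItemA added before Package1 runs, so no error is expected.
control = FakeEnvironment()
control.table.append("ItemA")
control.run_package1()
control.run_package2()
```

Because nothing runs on a timer, the same sequence gives the same result every time, which is exactly what we need to verify both the bug and its fix.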

A tester on my team argued, “But that’s not realistic.” She’s right. But if we understand the bug as much as we think we do, we should be able to repeatedly experience the bug and its fix, using our controlled environment. And if we can’t, then we really don’t understand the bug.

This is what it’s all about. Be creative as a tester, simplify things, and control your environment.

The Answer Is “Yes, I can test it”

5 comments Posted by Eric Jacobson at Thursday, September 22, 2011

Which of these scenarios will make you a rock star tester? Which will make your job more interesting? Which provides the most flexible way for your team to handle turbulence?

SCENARIO 1

Programmer: We need to refactor something this iteration. It was an oversight and we didn’t think we would have to.

Tester: Can’t this wait until next iteration? If it ain’t broke, don’t fix it.

BA: The users really can’t wait until next iteration for FeatureA. I would like to add FeatureA to the current iteration.

Tester: Okay, which feature would you like to swap it out for?

Programmer: I won’t finish coding this until the last day of the iteration.

Tester: Then we’ll have to move it to a future iteration, I’m not going to have time to test it.

SCENARIO 2

Programmer: We need to refactor something this iteration. It was an oversight and we didn’t think we would have to.

Tester: Yes, I can test it. I’ll need your help, though.

BA: The users really can’t wait until next iteration for FeatureA. I would like to add FeatureA to the current iteration.

Tester: Yes, I can test it. However, these are the only tests I’ll have time to do.

Programmer: I won’t finish coding this until the last day of the iteration.

Tester: Yes, I can test it…as long as we’re okay releasing it with these risks.

Yesterday, a tester on my team gave an excellent presentation for our company’s monthly tester community talk. She talked about what she had learned about effective tester conversation skills in her 15 years as a tester. Here are some of my favorite take-aways:

- When sending an email or starting a verbal conversation to raise an issue, explain what you have done. Prove that you have actually tried concrete things. Explain what concrete things you have done. People appreciate knowing the effort you put into the test and sometimes spot problems.

- Replace pronouns with proper names. Even if the conversation thread’s focus is the Save button, don’t say, “when I click on it”, say “when I click on the Save button.”

- Before logging a bug, give your team the benefit of the doubt. Explain what you observe and see what they say. She said 50% of the time, things she initially suspects as bugs, are not bugs. For example: the BA may have discussed it with the developer and not communicated it back to the team yet.

- Asking questions rocks. You can do it one-on-one or in team meetings. One advantage of doing it in meetings is to spark other people’s brains.

- It’s okay to say “I don’t understand” in meetings. But if, after asking three times, you appear to be the only one in the meeting not understanding, stop asking! Save it for a one-on-one discussion so you don’t develop a reputation of wasting people’s time.

- Don’t speak in generalities. Be precise. Example:

- Don’t say, “nothing works”.

- Instead, pick one or two important things that don’t work, “the invoice totals appear incorrect and the app does not seem to close without using the Task Manager”.

- Know your team. If certain programmers have a rock solid reputation and don’t like being challenged, take some extra time to make sure you are right. Don’t waste their time. It hurts your credibility.

She had some beautiful exercises she took us through to reinforce the above points and others. My favorite was taking an email from a tester, and going through it piece-by-piece to improve it.

I attended Robert Sabourin’s Just-In-Time Testing (JITT) tutorial at CAST2011.

The tutorial centered around the concept of “test ideas”. According to Rob, a test idea is “the essence of the test, but not enough to do the test”. One should get the details when they actually do the test. A test idea should be roughly the size of a Tweet. Rob believes one should begin collecting test ideas “as soon as you can smell a project coming, and don’t stop until the project is live”.

The notion of getting the test details when you do the test makes complete sense to me and I believe this is the approach I use most often. In Rapid Software Testing, we called them “test fragments” instead of “test ideas”. James Bach explains it best, “Scripted (detailed) testing is like playing 20 questions and writing out all the questions in advance.” …stop and think about that for a second. Bach nails it for me every time!

We discussed test idea sources (e.g., state models, requirements, failure modes, mind maps, soap operas, data flow). These sources will leave you with loads of test ideas, certainly more than you will have time to execute. Thus, it’s important to agree on a definition of quality, and use that definition to prioritize your test ideas.

As a group, we voted on three definitions of quality:

- “…Conformance to requirements” – businessman and author, Phil B. Crosby

- “Quality is fitness for use” - 20th century management consultant, Joseph Juran

- “Quality is value to some person” - computer scientist, author and teacher, Gerald M. Weinberg

The winning definition was #2, which also became my favorite, switching from my previous favorite, #3. #2 is easier to understand and a bit more specific.

In JITT, the tester should periodically do this:

- Adapt to change.

- Prioritize tests.

- Track progress.

And with each build, the tester should run what Rob calls Smoke Tests and Fast Tests:

- Smoke Tests – The purpose is build integrity. Whether the test passes or fails is less important than if the outcome is consistent. For example, if TestA failed in dev, it may be okay for TestA to fail in QA. That is an interesting idea. But, IMO, one must be careful. It’s pretty easy to make 1000 tests that fail in two environments with different bits.

- Fast Tests – Functional, shallow but broad.

Most of the afternoon was devoted to group exercises in which we were to develop test ideas from the perspective of various functional groups (e.g., stakeholders, programmers, potential users). We used Rob’s colored index card technique to collect the test ideas. For example: red cards are for failure mode tests, green are confirmatory, yellow for “ility” like security and usability, blue was for usage scenarios, etc.

Our tests revolved around a fictitious chocolate wrapping machine and we were provided with a sort of Mind Map spec describing the Wrap-O-Matic’s capabilities.

After the test idea collection brainstorming within each group, we prioritized other groups’ test ideas. The point here was to show us how different test priorities can be, depending on who you ask. Thus, as testers, speaking to stakeholders and other groups is crucial for test prioritization.

At first, I considered using the colored index card approach on my own projects, but after seeing Rob’s walkthrough of an actual project he used them for, I changed my mind. Rob showed a spreadsheet he created, where he re-wrote all his index card test ideas so he could sort, filter, and prioritize them. He assigned unique IDs to each and several other attributes. Call me crazy, but why not put them in the spreadsheet to begin with…or some other modern computer software program designed to help organize.

Overall, the tutorial was a great experience and Rob is always a blast to learn from. His funny videos and outbursts of enthusiasm always hold my attention. His material and ideas are usually practical and generic enough to apply to most test situations.

Thanks, Rob!

CAST2011 Emerging Topics and Lightning Talks

1 comments Posted by Eric Jacobson at Thursday, September 01, 2011

After completing my own CAST2011 Emerging Topics track presentation, “Tester Fatigue and How to Combat It”, I stuck around for several other great emerging topics and later returned for the Lightning Talks.

My stand out favorites were:

- Who Will Test The Robots? – This brief talk lasted a minute or two but I really loved the Q&A and have been thinking about it since. The speaker was referred to as T. Chris (and well-known by other testers). One observer answered by suggesting that we’ll need to build robots to test the robots. I realized this question will need to be answered sooner than we think. Especially after the dismal reliability of my Roomba.

- Improv Comedy / Testing Songs – This was my first encounter with the brilliant and creative, Geordie Keitt. He changes the lyrics of classic pop songs to be about testing. You can hear a sampling of his work on his blog. Sandwiched between his musical live performances were several audience participation testing comedy improvs with Geordie, Lanette Creamer, and Michael Bolton. It was bizarre seeing Bolton quickly turn an extension cord into a cheese cutting machine that needed better testing. At some point during this session, Lanette emerged, dressed as a cat (from head to toe) and began singing Geordie’s tester version of Radiohead’s Creep song. This version must have been called, “Scope Creep”. Here is a clip…

- Stuff To Do When I Get Back From CAST2011 – This Lightning Talk was presented by Liz Marley. I found it very classy. This is what Liz said she would do after CAST2011 (these are from my notes so I may have changed them slightly):

- Send a hand-written thank you note to her boss for sending her to CAST2011.

- Review her CAST2011 notes while fresh in her mind.

- Follow up with people she met at CAST2011.

- Sign up for BBST.

- Get involved in Weekend Testing.

- Schedule a presentation in her company, to present what she learned at CAST2011.

- Plan a peer conference.

- Watch videos for CAST2011 talks she missed.

- Make a blog post about what she learned at CAST2011.

- Invite her manager to come to CAST2012.

Thanks, Liz! So far I’ve done five of them. How about you?

- Mark Vasco’s Test Idea Wall – Mark showed us pictures of his team’s Test Idea Wall and explained how it works: take a wall, in a public place, and plaster it with images, phrases, or other things that generate test ideas. Invite your BA’s and Progs to contribute. Example: A picture of a dog driving a car reminds them not to forget to test their drivers. This is fun and valuable and I started one with one of my project teams this week.

- Between Lightning Talks, to fill the dead time, facilitator Paul Holland ripped apart popular testing metrics. He took real, “in-use”, testing metrics from the audience, wrote them on a flip chart, then explained how ridiculous each was. Paul pointed out that “bad metrics cause bad behavior”. Out of some 20 metrics, he concluded that only two were valuable:

- Expected Coverage vs. Actual Coverage

- Number of Bugs Found

Lightning Talks rock!

Don’t Describe The Bug In Your Repro Steps

3 comments Posted by Eric Jacobson at Tuesday, August 30, 2011

Want your bug reports to be clear? Don’t tell us about the bug in the repro steps.

If your bug reports include Repro Steps and Results sections, you’re halfway to success. However, the other half requires getting the information in the right sections.

People have a hard time with this. Repro steps should be the actions leading up to the bug. But they should not actually describe the bug. The bug should get described in the Results section (i.e., Expected Results vs. Actual Results).

The beauty of these two distinct sections, Repro Steps and Results, is to help us quickly and clearly identify what the bug being reported is. If you include the bug within the repro steps, our brains have to start wondering if it is a condition necessary to lead up to the bug, if it is the bug, if it is some unrelated problem, or if the author is just confused.

In addition to missing out on clarity, you also create extra work for yourself and the reader by describing the bug twice.

Don’t Do This:

Repro Steps:

- Create a new order.

- Add an item to your new order.

- Click the Delete button to delete the order.

- The order does not delete.

Expected Results: The order deletes.

Actual Results: The order does not delete.

Instead, Do This:

Repro Steps:

- Create a new order.

- Add an item to your new order.

- Click the Delete button to delete the order.

Expected Results: The order deletes.

Actual Results: The order does not delete.
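If you want a rough safety net, a small check can flag a repro step that merely restates the Actual Results. This is only a sketch: the `BugReport` class and the `steps_describing_bug` helper are hypothetical names I made up for illustration, not part of any bug tracker, and the comparison is a naive exact match after light normalization.

```python
from dataclasses import dataclass


@dataclass
class BugReport:
    repro_steps: list[str]
    expected: str
    actual: str


def _normalize(text: str) -> str:
    # Compare loosely: ignore case, surrounding whitespace, and a trailing period.
    return text.strip().rstrip(".").lower()


def steps_describing_bug(report: BugReport) -> list[str]:
    """Return the repro steps that just restate the Actual Results."""
    actual = _normalize(report.actual)
    return [step for step in report.repro_steps if _normalize(step) == actual]


bad = BugReport(
    repro_steps=[
        "Create a new order.",
        "Add an item to your new order.",
        "Click the Delete button to delete the order.",
        "The order does not delete.",  # this is the bug, not a step
    ],
    expected="The order deletes.",
    actual="The order does not delete.",
)
good = BugReport(bad.repro_steps[:3], bad.expected, bad.actual)

print(steps_describing_bug(bad))
print(steps_describing_bug(good))
```

A real report will rarely repeat the result word for word, so treat this as a reminder of the rule rather than a replacement for reading the report.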

CAST2011 was full of tester heavyweights. Each time I sat down in the main gathering area, I picked a table with people I didn’t know. One of those times I happened to sit down next to thetesteye.com blogger Henrik Emilsson. After enjoying his conversation, I attended his Crafting Our Own Models of Software Quality track session.

CRUSSPIC STMPL (pronounced Krusspic Stemple)…I had heard James Bach mention his quality criteria model mnemonic years ago. CRUSSPIC represents operational quality criteria (i.e., Capability, Reliability, Usability, Security, Scalability, Performance, Installability, Compatibility). STMPL represents development quality criteria (i.e., Supportability, Testability, Maintainability, Portability, Localizability).

Despite how appealing it is to taste the phrase CRUSSPIC STMPL as it exercises the mouth, I had always considered it too abstract to benefit my testing.

Henrik, on the other hand, did not agree. He began his presentation quoting statistician George Edward Pelham Box, who said “…all models are wrong, but some are useful”. Henrik believes we should all create models that are better for our context.

With that, Henrik and his tester colleagues took Bach’s CRUSSPIC STMPL, and over the course of about a year, modified it to their own context. Their current model, CRUCSPIC STMP, is posted here. They spent countless hours reworking what each criterion means to them.

They also swapped out some of the criteria for their own. Of note was swapping out the fourth letter, “S”, for a “C”: Charisma. When you think about some of your favorite software products, charisma probably plays an important role. Is it good-looking? Do you get hooked and have fun? Does the product have a compelling inception story (e.g., Facebook)? And to take CRUCSPIC STMP further, Henrik has worked in nested mnemonics. The Charisma quality item descriptors are SPACE HEADS (i.e., Satisfaction, Professionalism, Attractiveness, Curiosity, Entrancement, Hype, Expectancy, Attitude, Directness, Story).

Impressive. But how practical is it?

After Henrik’s presentation, I have to admit, I’m convinced it has enough value to be worth the effort:

- Talking to customers - If quality is value to some person, a quality model can be used to help that person (customers/users) explain which quality criteria are most important to them. This, in turn, will guide the tester.

- Test idea triggers - Per Henrik, a great model inspires you to think for yourself.

- Evaluating test results – If Concurrency is a target quality criterion, did my test tell me anything about performing parallel tasks?

- Talking about testing – Reputation and integrity are important traits for skilled testers. When James Bach or Henrik Emilsson talk about testing, their intimate knowledge of their quality models gives them an air of sophistication that is hard to beat.

Yes, I’m inspired to build a quality criteria model. Thank you, Henrik!

Safety Language and the Preservation of Uncertainty

0 comments Posted by Eric Jacobson at Thursday, August 18, 2011

On the third day of CAST2011, Jeff (another tester) and I played the hidden picture exercise with James Bach. We were to uncover a hidden picture, one pixel at a time, uncovering the fewest pixels possible in a short amount of time. This forced us to think about the balance between coverage and gathering enough valuable information to stop. I won’t tell you our approach, but eventually we felt comfortable stating our conclusion. James challenged us to tell him with absolute certainty what the hidden picture was. I responded with, “It appears to be a picture of…”, which to my delight was followed by praise from the master. He remarked on my usage of safety language.

Two days earlier, Michael Bolton’s heady CAST2011 keynote kept me struggling to keep up. He discussed the studies of scientists and thinkers and related their findings to software testing. To introduce the conference theme, he concluded that what we call a fact is actually context dependent.

Since CAST2011 focused on the Context-Driven testing school, we heard a lot about testing schools (or ways of thinking about testing). For example, the Factory testing school believes tests should be scripted and repeatability is important. Some don’t like the label “Factory” but James Bach pulled a red card and argued the label “Factory” can be a good label under the right circumstances (e.g., manufacturing). I never really understood why I should care about testing schools until Bolton (and Bach) explained it this way…

Schools allow us to say “that person is of a different school” rather than “that person is a fool”.

I’ll try to paraphrase a few of Michael’s ideas:

- The world is a complex, messy, variable place. Testers should accept reality and know that some ambiguity and uncertainty will always exist. A tester’s job is to reduce damaging uncertainty. Testers can at least provide partial answers that may be useful.

- If quality is value to some person, then who should test the quality for various people? This is why it’s important for testers to learn to observe people, and determine what is important to them. Professor of Software Engineering, Cem Kaner, calls testing a social science for this reason.

- Cultural anthropologist Wade Davis believes people strive by learning to read the world beyond them.

Per Michael, if the above points are true, testers should use safety language. I really liked this part of the lesson. Instead of saying “it fails under these circumstances”, a tester should say “it appears to fail” or “it might fail under these circumstances”. Instead of “the root cause is…”, a tester should say “a root cause is…”. When dealing with an argument, say “I disagree” instead of “you’re wrong” and end by saying “you may be right”. This type of safety language helps to preserve uncertainty and I agree that testers should use it wherever possible.

Be Careful With That CAST2011 Kool-Aid

7 comments Posted by Eric Jacobson at Monday, August 15, 2011

After three days at CAST2011, I finally caught up on the #CAST2011 Twitter feed. It was filled with great thoughts and moments from the conference, which reflects most of what I experienced. There was only one thing missing: critical reaction.

In Michael Bolton’s thought-provoking keynote, I was reminded of Jerry Weinberg’s famous tester definition, “A tester is someone who knows that things can be different”. Well, before posting on what I learned at CAST2011, I’ll take a moment to document four things that could have been different.

Here are some things I got tired of hearing at CAST2011.

- Commercial test automation tools are the root of all evil. Quick Test Pro (QTP) was the one that took the most heat (it always is). Speakers liked to rattle off all the commercial test automation tools they could think of and throw them into a big book-burning-fire. The reason I’m tired of this is I’ve had great success using QTP as a test automation tool and I didn’t use any of its record/playback features. I’ve been using my QTP tests to run some 600 checks for the last 24 iterations and it has worked great. I think any tool can suck when used in the wrong context. These tools can also be effective in the right context.

- Physical things are shiny and cool and new all over again. One presentation was about different colored stickies on a white board instead of organizing work items on a computer (you’ve heard that before). One was about writing tests on different colored index cards. Someone suggested using giant Lego blocks to track progress. In each of these cases, one can see the complexity grow (e.g., let’s stick red things on blue things to indicate the blue things are blocked, one guy entered his index card tests into a spreadsheet so he could sort them). Apparently Einstein used to leave piles of index cards all over his house to write his ideas down on. I’m thinking maybe that was because Einstein didn’t have an iPhone. IMO, this obsession with using office supplies to organize complex work is silly. This is why we invented computers after all. Use software!

- Down with PowerPoint! It’s popular these days to be anti-PowerPoint and CAST2011 speakers jumped on that too. Half the speakers I saw did not bother to use PowerPoint. I think this is silly. There is a reason PowerPoint grew to such popularity. It works! I would much rather see an organized presentation that someone took the time to prepare, rather than watching speakers fumble around through their file structure looking for pictures or videos to show, which is what I saw 3 or 4 times. One speaker actually opened PowerPoint, mumbled something about hating it, then didn’t bother to use slideshow mode. So we looked at his slides in design view. PowerPoint presentations can suck, don’t get me wrong, but they can also be brilliant with a little creativity. Just watch some TED talks.

- Traditional scripting testers are wrong. You know the ones, those testers who write exhaustive test details so a guy off the street can execute their tests. Oh wait…maybe you don’t know the ones. Much time was spent criticizing that approach. I’m tired of it because I don’t really think those people are much of a threat these days. I’ve never worked with one and they certainly don’t attend CAST. Why spend time bashing them?

I’m not bitter. I learned from and loved all the speakers. Jon Bach and his brother, James, put on an excellent tester conference that I was extremely grateful to attend. I was just surprised we couldn’t get beyond the above.

Positive posts to come. I promise.

BTW - Speaking of candor… to Jon Bach’s credit, he opened Day2 with a clever self-deprecating bug report addressing conference concerns he had collected on Day1. Things like the name tag print being too small and the breakfast lacking protein. Most of these issues were addressed and he even used PowerPoint to address them. Go Jon! I was very impressed.

In my experiences, 95% of the test cases we write are read and executed only by ourselves. If we generally target ourselves as the audience, we should strive…

…to write the least possible to remember the test.

I like the term “test case fragment” for this. I heard it in my Rapid Software Testing class. On the 5% chance someone asks us about a particular test, we should be able to confidently translate our chicken scratches into a detailed test. That’s my target.

If we agree with the above, couldn’t we improve our efficiency even more by coming up with some type of test case shorthand?

For example:

- Instead of “Expected Results”, I write… “E:”.

- Instead of writing statements like “the user who cancelled their order, blocks the user who logged in first”, I prefer to assign variables, “UserA blocks UserB”.

- Instead of multiple steps, I prefer one step test cases. To get there I make some shortcuts.

- Rather than specifying how to get to the start condition (e.g., find existing data vs. create data), I prefer the flexibility of the word “with” as in “With a pending order”. How the order becomes pending is not important for this test.

- To quickly remember the spirit of the test, I prefer state-action-expected as in “With a pending order, delete the ordered product. E: user message indicates product no longer exists.”

- Deliberately vague is good enough. When I don’t have enough information to plug in state-action-expected, I capture the vague notion of the test, as in “Attempt to corrupt an order.”. In that case I drop the “E:” because it is understood, right? Expected Result: corruption handled gracefully.

It may be a stretch to call these examples “shorthand”, but I think you get the idea.
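On that 5% chance someone asks about a test, the state-action-expected form is regular enough to expand mechanically. Here is a minimal sketch, assuming fragments are written as “With <state>, <action>. E: <expected>” with the state and “E:” parts optional. The `Fragment` type and `parse_fragment` function are hypothetical names for illustration only.

```python
from typing import NamedTuple, Optional


class Fragment(NamedTuple):
    state: Optional[str]     # the "With ..." start condition, if any
    action: str
    expected: Optional[str]  # the "E:" part, if any


def _clean(text: str) -> str:
    return text.strip().rstrip(".")


def parse_fragment(text: str) -> Fragment:
    # Everything after " E:" is the expected result; the rest is state + action.
    body, _, expected = text.partition(" E:")
    state = ""
    if body.startswith("With ") and "," in body:
        state, _, body = body[len("With "):].partition(",")
    return Fragment(
        state=_clean(state) or None,
        action=_clean(body),
        expected=_clean(expected) or None,
    )


full = parse_fragment(
    "With a pending order, delete the ordered product. "
    "E: user message indicates product no longer exists."
)
vague = parse_fragment("Attempt to corrupt an order.")

print(full)
print(vague)
```

The deliberately vague fragment comes back with no state and no expected result, which matches the idea that its “E:” is understood.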

What test case shorthand do you use?

Run a SQL Trace To Look Behind The Curtain

5 comments Posted by Eric Jacobson at Tuesday, July 26, 2011

When we find a bug with repro steps we typically leave the deep investigation to our Progs and move on to the next test. But sometimes it’s fun to squeeze in a little extra investigation if time permits.

My service calls were not returning the expected results so my Prog showed me a cool little trick; how to capture the SQL being passed into my DB for specific service calls. This technique is otherwise known as performing a SQL trace. We ran a SQL trace and with a bit of filtering and some timing, we easily captured the SQL statements being triggered via the called service. We extracted the SQL from the trace and used it to perform direct DB calls. Said isolation helped us find the root problem; a bad join.

Ask your Progs or DBAs which tools they use to perform SQL Traces. We used SQL Profiler and SQL Server 2008 R2. Make sure they show you how to filter down the trace (e.g., only show TSQL, to a certain DB, sent from a specific trigger like a user or service) so you don’t have to sift through all the other transactions.
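If you don’t have SQL Profiler handy, the same idea can be tried on a toy scale. The sketch below is not SQL Profiler or SQL Server; it assumes an in-memory SQLite database and uses Python’s `sqlite3` trace callback to capture the SQL a call sends to the DB. The `orders` table and the `get_pending_orders` function are hypothetical stand-ins for a real service call.

```python
import sqlite3

captured = []  # every SQL statement the connection executes lands here

conn = sqlite3.connect(":memory:")
conn.set_trace_callback(captured.append)

conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
conn.execute("INSERT INTO orders (status) VALUES (?)", ("pending",))


def get_pending_orders(connection):
    """Stand-in for the service call under test (hypothetical)."""
    return connection.execute(
        "SELECT id FROM orders WHERE status = ?", ("pending",)
    ).fetchall()


captured.clear()  # the "timing" part: start the trace right before the call
rows = get_pending_orders(conn)

# The "filtering" part: keep only the statements we care about.
selects = [sql for sql in captured if sql.lstrip().upper().startswith("SELECT")]
for sql in selects:
    print(sql)
```

Whatever lands in `selects` can then be run directly against the DB, which is the isolation step that led us to the bad join.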

As testers, we typically open screens in our products and focus on the data that is presented to us. Does it meet our expectations? We are sometimes forced to be patient and wait for said data to present itself. Don’t forget to step back and determine what happens if the data doesn’t get a chance to present itself.

Test Steps:

1. Open a screen or window on the UI. This bug is easier to repro in places where lots of data needs to load on the screen or window. Look for asynchronous data loading (e.g., the user is given control after some data presents itself, while the rest of the data is still being fetched)

2. Close the screen or window before the data has finished loading. Be quick. Be creative; if the screen you opened doesn’t close on command, try its parent.

Expected Results: No errors are thrown. The product handles it gracefully by killing the data load process and releasing the memory.
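For a feel of what “handles it gracefully” can mean in code, here is a minimal sketch using Python’s `asyncio`. The `Screen` class is hypothetical and only models the idea: data loads in a background task, and closing early cancels the load and still runs the cleanup.

```python
import asyncio


class Screen:
    """Hypothetical screen that loads its data in a background task."""

    def __init__(self):
        self.rows = []
        self.released = False
        self._load_task = None

    async def _load(self):
        try:
            for i in range(100):
                await asyncio.sleep(0.01)  # pretend each row is a slow fetch
                self.rows.append(i)
        finally:
            self.released = True  # cleanup runs even when the load is cancelled

    def open(self):
        self._load_task = asyncio.create_task(self._load())

    async def close(self):
        self._load_task.cancel()
        try:
            await self._load_task
        except asyncio.CancelledError:
            pass  # expected: we asked for the cancel


async def close_before_load_finishes():
    screen = Screen()
    screen.open()
    await asyncio.sleep(0.03)  # the user gets control while data is still loading
    await screen.close()       # be quick: close before the load completes
    return screen


screen = asyncio.run(close_before_load_finishes())
print(screen.released, len(screen.rows))
```

The two things to look at afterwards mirror the expected results: no exception escaped, and the cleanup flag was set even though the load never finished.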

After reading my Testing for Bug Bucks & Breaking The Rules post, In2v asked, “I was wondering if your team went on with that game and how it turned out. Would you update us?”

Sure. The Bug Bucks game/experiment failed miserably. It sounded good on paper but in practice it was too awkward. When testers found bugs, they didn’t have the heart to ask Progs for Bug Bucks. Go figure…

We like to joke about testers and Progs being caught up in some kind of rivalry. But when we’re actually performing our jobs on serious work, we want each other to succeed. Both tester and Prog had an understanding that nobody was at fault; they were both doing their jobs. When I asked TesterA why he didn’t take any Bug Bucks from ProgA, I was told ProgA would have been in Bug Buck debt (because of all the bugs) but it didn’t seem fair because many of the bugs were team oversights.

We could have adjusted the rules but I could see it was too awkward. And just before management was getting ready to order some Bug Buck goods for purchase, I suggested we pull the plug.

So now I have a stack of worthless Bug Bucks on my desk, and a recurring hope to come up with a more fun way to perform our jobs. I’m open to suggestions…

From this post forward, I will attempt to use the term “Prog” to refer to programmers.

I read a lot of Michael Bolton and I agree, testers are developers too. So are the BAs. Testers, Programmers, and BAs all work together to develop the product. We all work on a software development team.

Before I understood the above, I used the word “Dev” as shorthand for “developer” (meaning programmer). Now everybody on my development team says “Dev” (to reference programmers). It has been a struggle to change my team culture to get everyone to call them “programmers”. I’m completely failing and almost ready to switch back to “Dev”, myself. I don’t much like the word, “programmer”…too many syllables and letters.

Thus, I give it one last attempt. “Prog” is perfect! Please help me popularize this term. It’s clearly short for “programmer”, easy to spell, and fun to say. It also reminds me of “frog”, which is fitting because some progs are like frogs. They sit all day waiting for us to give them bugs.

We have a recurring conversation on both my project teams. Some Testers, Programmers, BAs believe certain work items are “testable” while others are not. For example, some testers believe a service is not “testable” until its UI component is complete. I’m sure most readers of this blog would disagree.

A more extreme example of a work item, believed by some to not be “testable”, is a work item for Programmer_A to review Programmer_B’s code. However, there are several ways to test that, right?

- Ask Programmer_A if they reviewed Programmer_B’s code. Did they find problems? Did they make suggestions? Did Programmer_B follow coding standards?

- Attend the review session.

- Install tracking software on Programmer_A’s PC that programmatically determines if said code was opened and navigated appropriately for a human to review.

- Ask Programmer_B what feedback they received from Programmer_A.

IMO, everything is testable to some extent. But that doesn’t mean everything should be tested. These are two completely different things. I don’t test everything I can test. I test everything I should test.

I firmly believe a skilled tester should have the freedom to decide which things they will spend time testing and when. In some cases it may make more sense to wait and test the service indirectly via the UI. In some cases it may make sense to verify that a programmer code review has occurred. But said decision should be made by the tester based on their available time and queue of other things to test.

We don’t need no stinkin’ “testable” flag. Everything is testable. Trust the tester.

Testers, Let’s Get Our Bug Language Correct

0 comments Posted by Eric Jacobson at Thursday, June 16, 2011

Hey Testers, let’s start paying more attention to our bug language. If we start speaking properly, maybe the rest of the team will join in.

Bug vs. Bug Report:

We can start by noting the distinction between a bug and a bug report. When someone on the team says, “go write a bug for this”, what they really mean is “go write a bug report for this”. Right? They are NOT requesting that someone open the source code and actually write a logic error.

Bug vs. Bug Fix:

“Did you release the bug?”. They are either asking “did you release the actual bug to some environment?” or “did you release the bug fix?”.

Missing Context:

“Did you finish the bug?”. I hear this frequently. It could mean “did you finish fixing the bug?” or it could mean “did you finish logging the bug report?” or it could mean “did you finish testing the bug fix?”.

Bug State Ambiguity:

“I tested the bug”. Normally this means “I tested the bug fix.” However, sometimes it means “I reproduced the bug.”…as in “I tested to see if the bug still occurs”.

It only takes an instant to tack the word “fix” or “report” onto the word “bug”. Give it a try.

A fun and proud moment for me. Respected tester, Matt Heusser, interviewed me for his This-Week-In-Software-Testing podcast on Software Test Professionals. It was scary because there was no [Backspace] key to erase anything I wished I hadn’t said.

I talked a bit about the transition from tester to test manager, what inspires testers, and some other stuff. It was truly an honor for me.

The four most recent podcasts are available free, although you may have to register for a basic (free) account. However, I highly recommend buying the $100 membership to unlock all 49 (and counting) of these excellent podcasts. I complained at first but after hearing Matt’s interviews with James Bach, Jerry Weinberg, Cem Kaner, and all the other great tester/thinkers, it was money well spent. The production is top notch and listening to Matt’s testing ramblings on each episode is usually as interesting as the interview. There are no podcasts available like these anywhere.

Keep up the great work Matt and team! And keep the podcasts coming!

Keeping Up With Bugs In Multiple Iterations

5 comments Posted by Eric Jacobson at Monday, June 06, 2011

We all have different versions of our product on different environments, right? For example: If Iteration 10 is in Production, Iteration 11 is in one or more QA environments. When bugs exist in both Iterations, we have BIMIs (Bugs-In-Multiple-Iterations).

One of my project teams just found a gap in our process that resulted in a BIMI hitching a ride all the way to production. That means our users found a bug, we fixed it, and then our users found the same bug four weeks later (and then we fixed it again!). Our process for handling bugs had always been to log one bug report per bug. Here is the problem.

- Let’s say we have a production bug (in Iteration 10).

- Said bug gets a bug report logged.

- Our bug gets fixed and tested in our post-production environment (i.e., test environment with same bits as production).

- Finally, the fix deploys to production and all is well, right? The bug report status changes to “Closed”.

- Now we can get back to testing Iteration 11.

What did we forget?

…well it’s probably not clear from my narrative but our process gap is that the above bug fix code never got deployed to Iteration 11 and the testers didn’t test for it because “Closed” bugs are already fixed in prod, and thus, off the testers’ radar.

If our product was feasible to automate, we could have added a new test to our automation suite to cover this. But in our mostly manual process, we have to remember to test for BIMIs. The fact is, the same bug fix can be Verified in one iteration and Failed in another. The bug can take a different path in each environment or, like in my case, fail to deploy to the expected environment at all.

This iteration we are experimenting with a solution. For BIMIs, we are making a separate copy of the bug report and calling it a “clone”. This may fly in the face of leaner documentation teams but we think it’s a good idea based on our history.

What’s your solution for making sure bug fixes make it into the next build?

Non-ETL Data Warehouse Tests You Can Do

2 comments Posted by Eric Jacobson at Thursday, June 02, 2011

Your mission is to test a new data warehouse table before its ETL process is even set up. Here are some ideas:

Start with the table structure. You can do this before the table even has data.

- If you’ve got design specs, start with the basics;

- Do the expected columns exist?

- Are the column names correct?

- Are the data types correct?

- Is the null handling type correct?

- Do the columns logically fit the business needs? This was already discussed during design. Even if you attended the design, you may know more now. Look at each column again and visualize data values, asking yourself if they seem appropriate. You’ll need business domain knowledge for this.

- Build your own query that creates the exact same table and populates it with correct values. Don’t look at the data warehouse source queries! If you do, you may trick yourself into thinking the programmer must be correct.
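The basic structure checks above lend themselves to a small script. This is a sketch under stated assumptions: it uses an in-memory SQLite table as a stand-in for the warehouse, and the `dw_orders` table, the `SPEC` dictionary, and the `schema_problems` function are all hypothetical. On SQL Server you would more likely read the column metadata from `INFORMATION_SCHEMA.COLUMNS` instead of SQLite’s `PRAGMA table_info`.

```python
import sqlite3

# Hypothetical design spec: column name -> (data type, nullable?)
SPEC = {
    "order_id": ("INTEGER", False),
    "customer_name": ("TEXT", False),
    "total": ("REAL", True),
}

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE dw_orders ("
    " order_id INTEGER NOT NULL,"
    " customer_name TEXT,"  # the spec says NOT NULL, so this should be flagged
    " total REAL)"
)


def schema_problems(connection, table, spec):
    # PRAGMA table_info rows are (cid, name, type, notnull, default, pk).
    actual = {
        name: (col_type.upper(), not notnull)
        for _, name, col_type, notnull, _, _ in connection.execute(
            f"PRAGMA table_info({table})"
        )
    }
    problems = [f"missing column: {c}" for c in spec if c not in actual]
    problems += [f"unexpected column: {c}" for c in actual if c not in spec]
    problems += [
        f"{c}: expected {spec[c]}, found {actual[c]}"
        for c in spec
        if c in actual and spec[c] != actual[c]
    ]
    return problems


problems = schema_problems(conn, "dw_orders", SPEC)
for problem in problems:
    print(problem)
```

This only covers the mechanical questions (names, types, null handling). Whether the columns fit the business needs still takes a human with domain knowledge.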

Once you have data in your table you can really get going.

- Compare the record set your query produced with that of the data warehouse table. This is where 70% of your bugs are discovered.

- Are the row counts the same?

- Are the data values the same? This is your bread and butter test. This comparison should be done programmatically via an automated test so you can check millions of columns & rows (see my Automating Data Warehouse Tests post). Another option would be to use a diff tool like DiffMerge. A third option, just spot check each table manually.

- Are there any interesting columns? If so, examine them closely. This is where 20% of your bugs are hiding. This testing cannot be automated. Look at each column and think about the variety of record scenarios that may occur in the source applications; ask yourself if the fields in the target make sense and support those scenarios.

- Columns that just display text strings like names are not all that interesting because they are difficult to screw up. Columns that are calculated are more interesting. Are the calculations correct?

- Did the design specify a data type change between the source and the target? Maybe an integer needed to be changed to a bit to simplify data…was it converted properly? Do the new values make sense to the business?

- How is corrupt source data handled? Does the source DB have orphaned records or referential integrity problems? Is this handled gracefully? Maybe the data warehouse needs to say “Not Found” for some values.

- Build a user report based on the new data warehouse table. Do you have a trusted production report from the transactional DB? Rebuild it using the data warehouse and run a diff between the two.
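The row count and data value comparison can be sketched in a few lines. Again this assumes an in-memory SQLite database rather than a real warehouse; `dw_orders` stands for the warehouse table and `test_orders` for the record set your own query produced, both hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE dw_orders   (order_id INTEGER, total REAL);  -- warehouse table
    CREATE TABLE test_orders (order_id INTEGER, total REAL);  -- tester's own result
    INSERT INTO dw_orders   VALUES (1, 10.0), (2, 25.0), (3, 7.5);
    INSERT INTO test_orders VALUES (1, 10.0), (2, 20.0), (3, 7.5);
    """
)


def row_count(table):
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


def rows_only_in(left, right):
    # EXCEPT returns the rows of the first query that are absent from the second.
    return conn.execute(
        f"SELECT * FROM {left} EXCEPT SELECT * FROM {right} ORDER BY 1"
    ).fetchall()


same_counts = row_count("dw_orders") == row_count("test_orders")
only_in_dw = rows_only_in("dw_orders", "test_orders")
only_in_test = rows_only_in("test_orders", "dw_orders")

print(same_counts, only_in_dw, only_in_test)
```

Note that `EXCEPT` compares distinct rows, so duplicated rows can slip past it. That is one reason to keep the separate row count check.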

What am I missing?

Sometimes, the most feasible way to test something, is to let it soak in an active test environment for several weeks. Examples:

- No repro steps but general product usage causes data corruption. We think we fixed it. Release the fix to an active test environment, let it soak, and periodically check for data corruption.

- A scheduled job runs every hour to perform some updates on our product. We tested the hourly job, now let’s let it run for two weeks in an active test environment. We expect each hourly run to be successful.

Per Google, soak testing involves observing behavior whilst under load for an extended period of time. In my case, load is normally a handful of human testers, as opposed to a large programmatic load of thousands. Nevertheless, the term is finally catching on within my product teams.

Who cares about the term? I like it because it honestly describes the tester effort, which is very little. It does not mislead the team into thinking testers are spending much time investigating something. It’s almost like not testing. But yet, we still plan to observe from time to time and eventually make an assessment of success or failure.

Be sure to over-enunciate the “k” in “soak”. People on my team thought I was saying “soap” test. I’m not sure what a soap test is…but I’m sure it exists too!

Break Up Your Testing Into Sessions And Breaks

2 comments Posted by Eric Jacobson at Tuesday, May 10, 2011

The Proceedings of the National Academy of Sciences just published a study, “Extraneous factors in judicial decisions”, that finds decision making is mentally taxing and when people are forced to continually make difficult decisions, they get tired and begin opting for the easiest decision.

Eight parole board judges were observed for 10 months, as they ruled whether or not to grant prisoners parole. The study noticed a trend. Near the end of work periods, prisoners being granted parole dropped significantly. The decision of granting parole takes much longer to explain and involves more work than the decision to deny parole.

(For those of you reading my blog from prison, try to get your parole hearing scheduled first thing in the morning or right after lunch.)

What does this remind you of?

Testing! I don’t have the ambition to perform said study on test teams, but I have certainly experienced the same pattern. I’m guessing fewer bugs get logged later in the day. The decision that you found a bug is a much more difficult decision than denial. Deciding you found a bug means investigating, logging a report, convincing people sometimes, testing the fix, regression testing what broke, etc.

I wrote about Tester Fatigue and suggested solutions in How To Combat Tester Fatigue. But according to the above study, taking breaks from testing is paramount. Therefore, I will now head downstairs for some frozen yogurt.

Don’t Test It #2 – Programmer-Logged Bugs

1 comments Posted by Eric Jacobson at Monday, May 02, 2011

When programmers log bugs, we testers are grateful of course. But when programmer-logged bugs travel down their normal work flow and fall into our laps to verify, we’re sometimes befuddled…

”Hey! Where are the repro steps? How can I simulate that the toolbar container being supplied is not found in the collection of merged toolbars?”

I used to insist that every bug fix be tested by a tester. No exceptions! Some of these programmer-logged bugs were so technical, I had to hold the programmer’s hand through my entire test and test it the same way the programmer already tested it. This is bad because my test would not find out anything new. Later I realized I was wasting not only the programmer’s time but also my own, time I could have spent executing new tests.

Sometimes, it’s still good to waste time for the sake of understanding but don’t make it a hard and fast rule for everything. Instead, you may want to do as follows:

- Ask the programmer how they fixed it and tested their fix. Does it sound reasonable?

- Ensure the critical regression tests will be run in the patched module, before production deployment.

Then rubber stamp the bug and spend your time where you can be more helpful.

What Testers Don’t Like About Their Jobs

5 comments Posted by Eric Jacobson at Monday, April 18, 2011

I did a Force Field Analysis brainstorm session with my team. We wrote down what we like and don’t like about our jobs as testers. Then, in the “didn’t like” column, we circled the items we felt we had control over changing. Here is what my testers and I don’t like about our jobs.

Items We May Not Have Control Over:

- When asked what needs to be tested as a result of a five minute code change, programmers often say “test everything”.

- Stressful deadlines.

- Working extra hours.

- Test time is not adequately considered by Programmers/BAs when determining iteration work. Velocity does not appear to matter to team.

Items We May Have Control Over:

- Testers don’t have a way to show the team what they are working on. Our project task boards have a column/status for “Open”, “In Development”, “Developed”, and “Tested”. It’s pretty easy to look under the “In Development” column to see what programmers are working on. But after that, stuff tends to bunch up under the “Developed” column. Even though there may be 10 Features/Stories in “Developed”, the testers may only be working on two of them. A side effect is testers having to constantly answer the question “What are you working on?”...my testers hate that question.

- Testers don’t know each other’s test skills or subject matter expertise. We have some 20 project teams in my department. Most of the products interact. Some are more technical to test than others. Let’s say you’re testing ProductA and you need help understanding its interface with ProductB. Which tester is your oracle? Let’s say you are testing web services for the first time and you’re not sure how to do this. Which tester is really good at testing web services and can help you get started?

- Testers lose momentum when asked to change priorities or switch testing tasks. A programming manager once told me, “each time you interrupt a programmer it takes them 20 minutes to catch back up”. Testers experience the same interruption productivity loss, but arguably, to a larger degree. It is annoying to execute tests in half-baked environments, while following new bugs that may or may not be related, along the way.

We will have a follow-up brainstorm on ways to deal with the above. I’ll post the results.

During a Support Team/Test Team collaboration, I was coaching a technical support team member through logging a bug. I suggested my usual template.

Repro Steps:

1. Do this.

2. Do that.

Expected Results: Something happens.

Actual Results: Something does not happen.

Afterwards, I said “That’s it.” She looked over the Spartan bug report and was reluctant to let it go. She said, “It looks too bare bones.” She wondered if we should add screen captures and more commentary. IMO, when a bug has repro steps, that’s all you need. And the fewer the better! Sure, a picture is worth a thousand words. But sometimes it only takes about 14.

If you’ve ever participated in bug triage meetings, you’ll probably agree. People love to fill bug reports with non-essential comments that obscure the spirit of the bug and discourage anyone from reading it.

“After those steps I tried it again and got the same error.”

“Maybe it is failing because I am doing this instead of that.”

“I remember it used to not throw an error, but now it does.”

“We could fix it by doing this instead of that.”

It’s a bug report, stupid. Report the bug and get back to testing. Opinions and essays are for blogs.

…Praise for the simple, bare-bones bugs.

Sounds too good to be true, huh?

One of my programmers is cool enough to request a code review with me when something critical is at stake. I can’t really read code and he knows it. So why does he keep suggesting we do code reviews?

He realizes said activity will force him to explain his code at a translated-to-layman-terms level. Occasionally, I’ll ask questions: “Why do you need that statement?”, “What if the code flow takes an unexpected path?”. But often I start to daydream…not about my weekend or other non-work things but about a previous statement the programmer made. My brain gets hung up trying to grok the code. And while I’m lost in thought, the programmer keeps zooming through his code, until…

…all of a sudden, the magic happens.

The programmer says, “Hold on…I see a mistake”. It happens every time! I kid you not! A different programmer on my team found two errors while explaining his unit tests to me Monday.

Now I’m not suggesting testers should daydream their way through code reviews. And of course, a tester capable of actually reading/understanding code is more valuable here. But I am suggesting this:

If you’re a tester who feels too uncomfortable or inadequate to perform a code review with your programmers, remember, all you have to do is listen and occasionally ask dumb questions. And even if you’re bored to death looking at someone’s code, and you fall into a daydream, who knows? You may have indirectly helped your programmer save the world.

Automating Change Data Capture Data Warehouse Tests

1 comments Posted by Eric Jacobson at Tuesday, March 29, 2011

Our Data Warehouse uses Change Data Capture (CDC) to keep its tables current. After collaborating with one of the programmers, we came up with a pretty cool automated test template that has allowed our non-programmer testers to successfully write their own automated tests for CDC.

We stuck to the same pattern as the tests I describe in my Automating Data Warehouse Tests post. Testers can copy/paste tests and only need to update the SQL statements, parameters, and variables. An example of our test is below. If you can’t read C#, just read the comments (they begin with //).

BTW – if you want a test like this but are not sure how to write it, just ask your programmers to write it for you. Programmers love to help with this kind of thing. In fact, they will probably improve upon my test.

Happy Data Warehouse Testing!

[TestMethod]

public void VerifyCDCUpdate_FactOrder()

{

//get some data from the source

var fields = DataSource.ExecuteReader(@"

SELECT TOP (1) OrderID, OrderName

FROM Database.dbo.tblOrder");

var OrderID = (int)fields[0];

var originalValue = (string)fields[1];

//make sure the above data is currently in the Data Warehouse

var DWMatch = new DataSource("SELECT OrderID, OrderName FROM FactOrder WHERE OrderID = @OrderID and OrderName = @OrderName",

new SqlParameter("@OrderID", OrderID),

new SqlParameter("@OrderName", originalValue));

//fail test if data does not match. This is still part of the test setup.

DataSourceAssert.IsNotEmpty(DWMatch, "The value in the datawarehouse should match the original query");

try

{

// Set a field in the source database to something else

var newValue = "CDCTest";

DataSource.ExecuteNonQuery(

@"UPDATE Database.dbo.tblOrder SET OrderName = @NewValue WHERE OrderID = @OrderID",

new SqlParameter("@NewValue", newValue),

new SqlParameter("@OrderID", OrderID));

var startTime = DateTime.Now;

var valueInDW = originalValue;

while (DateTime.Now.Subtract(startTime).TotalMinutes < 10)

{

// Verify the value in the source database is still what we set it to, otherwise the test is invalid

var updatedValueInSource = DataSource.ExecuteScalar<string>(@"SELECT OrderName FROM Database.dbo.tblOrder WHERE OrderID = @OrderID",

new SqlParameter("@OrderID", OrderID));

if (updatedValueInSource != newValue)

Assert.Inconclusive("The value {0} was expected in the source, but {1} was found. Cannot complete test", newValue, updatedValueInSource);

//start checking the target to see if it has updated. Wait up to 10 minutes (CDC runs every five minutes). This is the main check for this test. This is really what we care about.

valueInDW = DataSource.ExecuteScalar<string>(@"SELECT OrderName FROM FactOrder WHERE OrderID = @OrderID",

new SqlParameter("@OrderID", OrderID));

if (valueInDW == newValue)

break;

Thread.Sleep(TimeSpan.FromSeconds(30));

}

if (valueInDW != newValue)

Assert.Fail("The value {0} was expected in DW, but {1} was found after waiting for 10 minutes", newValue, valueInDW);

}

finally

{

// Set the value in the source database back to the original

// This will happen even if the test fails

DataSource.ExecuteNonQuery(

@"UPDATE Database.dbo.tblOrder SET OrderName = @OriginalValue WHERE OrderID = @OrderID",

new SqlParameter("@OriginalValue", originalValue),

new SqlParameter("@OrderID", OrderID));

}

}
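If you end up copy/pasting that wait loop into a lot of CDC tests, one option is to pull it into a small helper. The following is only a sketch of that idea, not what our team actually uses: the `Poll` class, its `Until` method, and the `Demo` class are hypothetical names I made up for illustration. It measures elapsed time with a `Stopwatch`, re-reads the value at a fixed interval, and returns the last value it saw so the test can still assert on it.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper (not part of the original test): wraps the
// "check, sleep, check again" loop so each test only supplies the query.
public static class Poll
{
    // Calls readValue until it returns expected or the timeout elapses.
    // Returns the last value read so the caller can assert on it.
    public static T Until<T>(Func<T> readValue, T expected, TimeSpan timeout, TimeSpan interval)
    {
        var stopwatch = Stopwatch.StartNew();
        var current = readValue();

        while (!EqualityComparer<T>.Default.Equals(current, expected)
               && stopwatch.Elapsed < timeout)
        {
            Thread.Sleep(interval);
            current = readValue();
        }

        return current;
    }
}

public static class Demo
{
    public static void Main()
    {
        // Stand-in for the Data Warehouse query: the "CDC job" catches up on the third read.
        var reads = 0;
        Func<string> fakeRead = () => ++reads < 3 ? "Original" : "CDCTest";

        var valueInDW = Poll.Until(fakeRead, "CDCTest",
            TimeSpan.FromSeconds(5), TimeSpan.FromMilliseconds(10));

        Console.WriteLine(valueInDW);
    }
}
```

In a real CDC test, the `fakeRead` delegate would be replaced by the `DataSource.ExecuteScalar<string>` call against FactOrder, with a 10 minute timeout and a 30 second interval, followed by the same `Assert.Fail` check on the returned value. Note that this sketch leaves out the "is the source still what we set it to" check, which would still need to run inside or alongside the polling.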

Don’t Test It #1 - Crisis In Production

9 comments Posted by Eric Jacobson at Friday, March 25, 2011

I find it belittling…the notion that everything must be tested by a tester before it goes to production. It means we test because of a procedure rather than to provide information that is valuable to somebody.

This morning our customers submitted a large job to one of our software products for processing. The processed solution was too large for our product’s output. So the users called support saying they were dead in the water and on the verge of missing a critical deadline. We had one hour to deliver the fix to production.

The fix, itself, was the easy part. A parameter needed its value increased. The developer performed said fix then whipped up a quick programmatic test to ensure the new parameter value would support the users’ large job. Per our process, the next stop was supposed to be QA. Given the following information I attempted to bypass QA and release the change straight to production:

- Testers would not be able to generate a large enough job, resembling that in production, in the time available.

- There was no QA environment mirroring production bits and data at this time. It would have been impossible to stand one up before the one hour deadline.

- The risk of us breaking production by increasing said parameter was insignificant because production was already non-usable (i.e., it would be nearly impossible for this patch to make production worse than it already was).

Even with the above considerations, some on the team reacted with horror…“What? No Testing?”. When I mentioned it had been tested by a developer and I was comfortable with said test, the response was still “A tester needs to test it”.

After convincing the process hawks it was not feasible for a tester to test, our next bottleneck was deployment. Some on the team insisted the bits go to a QA environment first, even though it would not be tested. This was to keep the bits in sync across environments. I agree with keeping the bits in sync, but how about worrying about that once we get our users safely through their crisis!

As I watched the email thread explode with process commentary and waited for the fix to jump through the hoops, I also listened to people who were in touch with the users. The users were escalating the severity of their crisis and reminding us of its urgency.

I believe those who insist everything must be tested by a tester do us a disservice by making our job a thoughtless process instead of a sapient service.

- As with fishing, testing without catching bugs can get boring. It’s important to let newbies catch some bugs.

- When someone finds a bug you missed, it’s humiliating. When the tables turn and you find the missed bug, maybe it’s time to set your ego aside and put your coaching hat on.

Testing is Intangible - Ask Testers What They Did

4 comments Posted by Eric Jacobson at Wednesday, March 09, 2011

- How did George Clooney do?

- Was it slow paced?

- Was the story easy to follow?

- Any twists or unexpected events?

- Was the cinematography to your liking?

Test Automation Authoring Is Harder Than It sounds

5 comments Posted by Eric Jacobson at Friday, March 04, 2011

Problem Steps Recorder – Windows 7 Test Tool

3 comments Posted by Eric Jacobson at Tuesday, February 22, 2011

Programmer Profiling - Don't Be Afraid To Use It

5 comments Posted by Eric Jacobson at Friday, February 11, 2011

- The TSA protects passengers by finding bombs among 3 million people.

- I protect users by finding bugs among 3 million lines of code.

- If I ask ProgrammerA how she tested something and she shows me a set of sound unit tests, and a little custom application she wrote to help her test better, I gain a certain level of confidence in her code.

- On the other hand, if I ask ProgrammerB how she tested something and she shrugs and says “that’s your job”, I gain a different level of confidence in her code.
