Why Integration Testing is Difficult

Posted by Eric Jacobson on Wednesday, September 18, 2013

What is the relationship between these two objects?

How about these two?

This, I’m afraid, is how testers (myself included) often see software modules: as black boxes. Their relationships are hidden from us. We know the programmer just changed something related to the seeds inside the orange, so we ask ourselves, “How could changing the seeds inside the orange affect the toaster?” Hmmmm. “Well, it sure seems like it couldn’t.” Then, after a deployment, we’re shocked to discover that the toaster has started burning all the toast.

Why didn’t the programmer warn us? Well, just because the programmer understands the innards of the orange doesn’t mean they understand the innards of the toaster. In fact, in my experience, if there is enough work to do on the orange, the orange programmer will happily take that work rather than learn to code for the toaster.

So here we are, left with nothing better to do than regression test the toaster, the jointer, and the flashlight every time the orange changes. No wonder we spend so much time regression testing.

In conclusion, maybe the more we learn about the architecture behind our software system, the fewer regression tests we’ll need to execute. Instead, we’ll be able to focus our testing better and make the invisible relationships visible to the rest of the team…before development even begins.

For most of us, testing for “coolness” is not at the top of our quality list. Our users don’t have to buy what we test; instead, their employer forces them to use it. Nevertheless, coolness can’t hurt.

As far as testing for it…good luck. It does not appear to be as straightforward as some may think.

I attended a mini UX conference earlier this week and saw Karen Holtzblatt, CEO and founder of InContext, speak. Her keynote was the highlight of the conference for me, mostly because she was fun to watch. She described the findings from 90 interviews and 2,000 survey responses, in which her company asked people to show them “cool” things and explain why they considered them cool.

Her conclusion was that software aesthetics matter far less than the following four aspects:

- Accomplishments – When using your software, people need to feel a sense of accomplishment without disrupting the momentum of their lives. They need to feel like they are getting something done that was otherwise difficult. They need to do this without giving up any part of their life. Example: Can they accomplish something while waiting in line?

- Connection – When using your software, they should be motivated to connect with people they actually care about (i.e., not just Facebook friends). These connections should be enriched in some way. Example: Were they able to share it with Mom? Did they talk about it over Thanksgiving dinner?

- Identity – When using your software, they should feel like they’re not alone. They should be asking themselves, “Who am I?” and “Do I fit in with these other people?” They should be able to share their identity with joy.

- Sensation – When using your software, they should experience a core sensory pleasure. Examples: Can they interact with it in a fresh way via some new interface? Can they see or hear something delightful?

Here are a few other notes I took:

- Modern users have no tolerance for anything but the most amazing experience.

- The app should help them get from thought to action, nothing in between.

- Users expect software to gather all the data they need and think for them.

I guess maybe I’ll think twice the next time I feel like saying, “just publish the user procedures, they’ll get it eventually”.

Crowdsource Testing – Acting vs. Using

Posted by Eric Jacobson on Thursday, December 15, 2011

My tech department had a little internal one-day idea conference this week. I wanted to keep it from becoming a programmer fest, so I pitched Crowdsource Testing as a topic. Although I knew nothing about it, I had just seen James Whittaker’s STARwest keynote, and the notion of having non-testers test my product had been bouncing around my brain.

With a buzzword like “crowdsource,” I guess I shouldn’t be surprised they picked it. That, and maybe mine was one of their only submissions.

Due to several personal conflicts, I had little time to research, but I did spend about 12 hours alone with my brain on a long car ride. During that time I came up with a few ideas, or at least built on other people’s.

- Would you hang this on your wall?

…only a handful of people raised their hands. But this is a Jackson Pollock! Worth millions! It’s quality art! Maybe software has something in common with art.

- Two testing challenges that probably cannot be solved by skilled in-house testers are:

- Quality is subjective. (I tip my hat to Michael Bolton and Jerry Weinberg)

- There is an infinite number of tests to execute. (I tip my hat to James Bach and Robert Sabourin)

- There is a difference between acting like a user and using software. (I tip my hat to James Whittaker). The only way to truly measure software quality is to use the software, as a user. How else can you hit just the right tests?

- Walking through the various test levels: Skilled testers act. Team testers act (this is when non-testers on your product team help you test). User acceptance testers act (they are still pretending to do some work). Dogfooders…ah yes! Dogfooders are finally not acting. This is the first level to cross the boundary between acting and using. Why not stop here? It’s still weak in the infinite-tests area.

- The next level is crowdsource testing. Or is it crowdsourced testing? I see both used frequently. I can think of three ways to implement crowdsource testing:

- Use a crowdsource testing company.

- Do a limited beta release.

- Do a public beta release.

- Is crowdsource testing acting or using? If you outsource your crowdsource testing to a company (e.g., uTest, Mob4hire, TopCoder), now you’re hiring actors again. However, if you do a limited or public beta release, you’re asking people to use your product. See the difference?

- Beta…is a magic word. It’s like stamping “draft” on the report you’re about to give your boss. It also says: this product is alive! New! Exciting! Maybe even cutting edge. It’s still fixable. We won’t hang you out to dry. It can only get better!

- Who should facilitate crowdsource testing? The skilled in-house tester. Maybe crowdsource testing is just another tool in their toolbox. If they spent this much time executing their own tests…

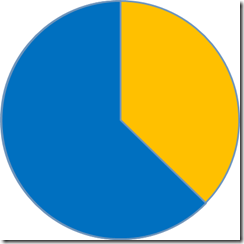

…maybe instead, they could spend this much time (blue) executing their own tests, and this much time (yellow) collecting test information from their crowd…

- How do we get feedback from the crowd? From least cool to coolest: the crowd calls our tech support team; we hold virtual feedback meetings; we go find the feedback on Twitter, etc.; the crowd provides feedback via feedback options tightly integrated into the product’s UI; we collect it programmatically, in a stealthy way.

- How do we enlist the crowd? Via creative spin and marketing (e.g., follow Hipster’s example).

- Which products do we crowdsource test? NOT the mission critical ones. We crowdsource the trivial products like entertainment or social products.

- How far can we take this? Far. We can ask the crowd to build the product too.

- What can we do now?

- BAs: Determine beta candidate features.

- Testers: Spend less time testing trivial features. Outsource those to the crowd.

- Programmers: Decouple trivial features from critical features.

- UX Designers: Help users find beta features and provide feedback.

- Managers: Encourage more features to get released earlier.

- Executives: Hire a crowdsource test czar.

There it is in a nutshell. Of course, the live presentation includes a bunch of fun extra stuff like the 1978 Alpo commercial that may have inspired the term “dogfooding”, as well as other theories and silliness.
